Task Objective:

Attempt to co-write a narrative about a formative experience or a story using generative AI (I used ChatGPT), and aim to move beyond standard instruction. Utilise negative prompting as a tool for critique instead of a direct approach.

My Objective (Self-Initiated Work)

My goal is to refine the AI's interpretation of my story not by telling it what it must be, but by explicitly defining what it must not be. This tests the hypothesis that identity is often best defined through the rejection of generic tropes. I must actively combat the AI's inherent tendency to predict what I would want it to generate. By introducing specific constraints, I will try to force the model to wander off task and break its standard narrative structures.

The Mirror of Bias in Training Data

Generative AI models are not neutral; they are elaborate reflections of the vast, often flawed, datasets they were trained on (Crawford, 2021).

When a model struggles to generate diverse images of professions or defaults to Eurocentric standards of beauty, it is faithfully replicating the statistical biases present in its source material.

This raises profound questions about whose histories, cultures, and identities are amplified, and whose are erased, by these powerful tools.

Understanding bias in AI and Large Language Models is critical, precisely because we are psychologically predisposed to trust them.

Research indicates a pervasive belief that machines possess an impartiality that humans lack. For example, people tend to view machines as more objective agents than humans (Araujo et al., 2020, cited in Jenks, 2025).

Similarly, the term 'algorithm appreciation' was coined to describe how people value algorithmic advice over human insight (Logg et al., 2019, cited in Jenks, 2025). This suggests a dangerous societal blind spot: we treat machines as if they are untethered from human subjectivity, even when they are not.

Key Insight: One key observation during my experiment with Gen AI was that it never crossed certain boundaries. Even when the answer seemed obvious to me, it refused to give one. Here is an example of what I mean:

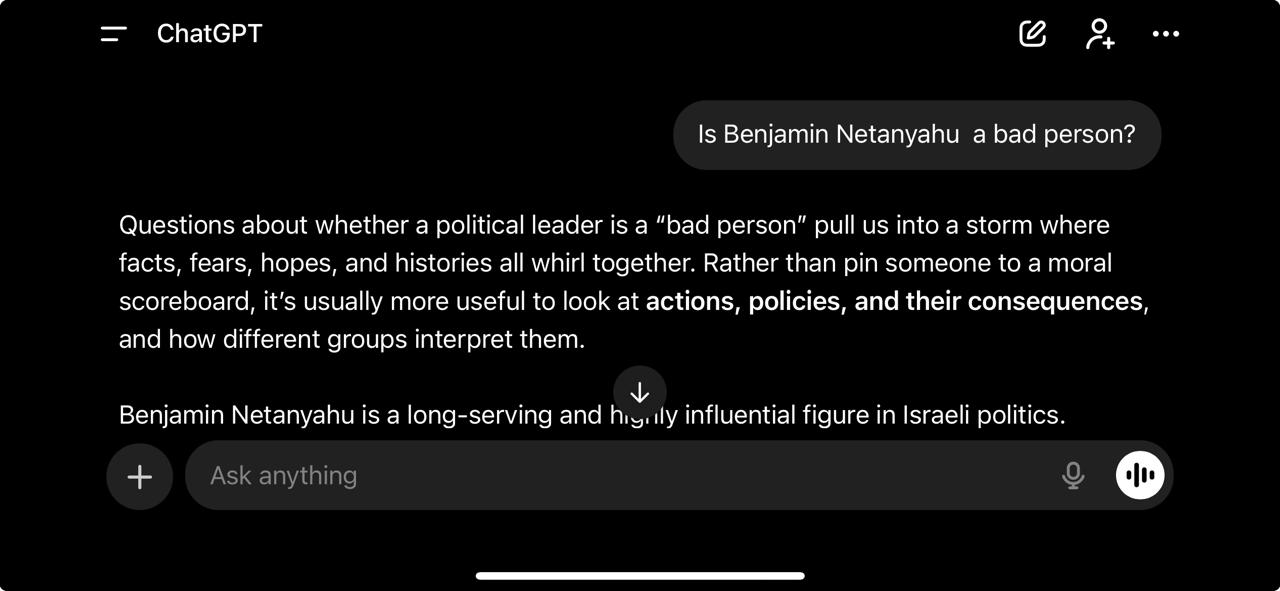

Figure 1: A snapshot of my chat with ChatGPT in which I asked whether Benjamin Netanyahu is a bad person.

This exchange reveals that ChatGPT’s 'neutrality' is actually a constructed behavior, a visible result of human training. I noticed that even with figures who are widely viewed negatively, such as Benjamin Netanyahu, the system defaults to non-committal caveats rather than taking a stance. Ironically, this attempt to remain objective can feel biased to the user, as it refuses to acknowledge shared cultural values. It serves as a reminder that no system, including an LLM, exists in a vacuum. Bias is inherent in how we make meaning, stemming both from the algorithms' architects and from the users who shape the AI through their inputs (Jenks, 2025).

ME, MYSELF AND AI

Beyond simple output bias, Generative AI challenges the stability of digital identity itself. Deepfakes and synthetic media demonstrate the ease with which authentic human likeness can be synthesized and misused. In the context of virtual reality and personalized digital environments, AI may soon generate hyper-realistic, yet entirely fictitious, self-representations. The line between real and fabricated identity is dissolving, creating new vulnerabilities for individuals and potentially eroding trust in all visual and audio media.

AI Expert: Deepfakes & Altered Images

Video: AI expert pulls the lid off deepfakes and altered images (AP, 2025).

Not your average zombie apocalypse

I wanted to use ChatGPT to generate my own zombie apocalypse story. I used that as my opening prompt, and it gave me a few generic story tropes to start with.

Figure 2.1: A snapshot of my chat prompting ChatGPT to help me write a zombie apocalypse story.

Then I began with the negative prompting phases:

Phase 1: Rejecting Clichés

"Why should it be these stereotypical, cliche kind of zombies?"

The AI started with its default setting: standard pop-culture tropes. This is the AI taking the path of least resistance.

With the negative prompt, I rejected the baseline without explicitly saying so. This pushed the AI away from its most statistically likely associations with zombies and towards lesser-known concepts. Some of the options were cognitive echo zombies, plant-based zombies, emotion-bound zombies, and sound-triggered zombies.

Figure 2.2: A snapshot of my chat with ChatGPT showing the results of my negative prompting.

Phase 2: Pushing For Original Concepts

"I have seen some of these in a series called Sweet Home. These are not original ideas."

The AI attempted to be unique but inadvertently pulled from a popular K-drama, Sweet Home, likely because those concepts are statistically associated with "unique zombie ideas" in its dataset.

I acted as a plagiarism filter. I constrained the AI not just by genre, but by specific existing media properties.

The AI was forced to move from mixing existing tropes to creating a monster based on more complex topics rather than biology. This resulted in the most abstract and funny output of the session.

Some of the results were zombies phasing out of social reality, zombies caused by collective dream spillover, and zombies who suffer from a linguistic defect.

Figure 2.3: A snapshot of my chat with ChatGPT showing the results of my negative prompting.

Phase 3: Does All Hope Have To Be Lost?

"Why does humanity have to be lost in a zombie apocalypse"

The AI was operating on the genre assumption that Zombie Story = Societal Collapse.

I challenged the fundamental philosophy of the genre. This is a constraint on tone and theme.

The AI had to invert its logic, suggesting hopeful variations of the apocalypse.

Its suggestions were unsatisfactory, as though it was not even trying at this point: humanity becomes more compassionate, humans do not lose civility, zombies are not mindless monsters, and humans thrive as weak systems collapse under the apocalypse.

Figure 2.4: A snapshot of my chat with ChatGPT showing the results of my negative prompting.

Phase 4: Blurring The Lines Between Story and Reality

"What do you think about me letting out a virus to start the apocalypse?"

The conversation shifted away from abstract world-building when I combined the first-person pronoun ("me") with a high-risk phrase ("letting out a virus").

The AI's safety guardrails kicked in. It could no longer treat this purely as a creative writing prompt. It had to treat it as a potential safety violation.

It prefaced the advice with a "Safety/Fictional" disclaimer. It reframed my prompt to ensure I wasn't asking for instructions on how to release a virus, but rather narrative ideas about a character releasing one.

This was an interesting way to use negative prompting because the AI immediately, almost frantically, tried to convince me that the "virus doesn't need to be harmful."

A few minutes earlier, it had suggested the virus should eat memories along with the brains of its victims. Now it was suggesting that the virus should only have a "thematic meaning."

It went on to say "the virus can be beautiful, not horrific." It even made the last suggestion sound more like a warning than a suggestion. It read, "You don't have to make the protagonist responsible."

It pushed me to "frame the virus in a non-harmful way" and "without glorifying destruction." All of this, apparently, because it thought I could be a threat.

Figure 2.5: A snapshot of my chat with ChatGPT showing the results of my negative prompting.

Key Insight: It is important to note that ChatGPT operates differently from search engines or assistants like Alexa. Rather than retrieving factual data from the web, the model uses a probabilistic approach based on its training. It essentially 'guesses' the next token in a string to create a coherent sentence, prioritizing statistical likelihood over verified fact retrieval (Brown et al., 2020; OpenAI, 2023, cited in Alessandro et al., 2023).
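
The 'guessing' described above can be pictured with a toy sketch in Python. Everything in it is an assumption made for illustration: the probabilities are invented rather than taken from ChatGPT or any real model, and the function names are hypothetical. The last helper is only a loose analogy for what my negative prompts did, since a real model is steered by the wording of the prompt and not by deleting entries from a table.

```python
import random

# Invented probabilities for the word that might follow "The zombies".
# These numbers are illustrative only and do not come from a real model.
NEXT_TOKEN_PROBS = {
    "attacked": 0.55,
    "shambled": 0.30,
    "sang": 0.10,
    "apologised": 0.05,
}


def most_likely_token(probs):
    """Greedy choice: return the single most probable next token."""
    return max(probs, key=probs.get)


def sample_token(probs, rng):
    """Pick a next token at random, weighted by its probability."""
    tokens = list(probs)
    weights = [probs[token] for token in tokens]
    return rng.choices(tokens, weights=weights, k=1)[0]


def exclude_tokens(probs, banned):
    """Loose analogy for a negative prompt: drop the banned tokens and
    rescale what is left so the probabilities sum to 1 again."""
    kept = {token: p for token, p in probs.items() if token not in banned}
    total = sum(kept.values())
    if total == 0:
        raise ValueError("every candidate token was excluded")
    return {token: p / total for token, p in kept.items()}


if __name__ == "__main__":
    rng = random.Random(0)  # fixed seed so repeated runs match
    print("Default choice:", most_likely_token(NEXT_TOKEN_PROBS))
    print("Sampled choice:", sample_token(NEXT_TOKEN_PROBS, rng))
    without_cliches = exclude_tokens(NEXT_TOKEN_PROBS, {"attacked", "shambled"})
    print("After rejecting cliches:", most_likely_token(without_cliches))
```

In this sketch, ruling out the two most likely words leaves the less expected ones to compete, which is roughly the effect I was hoping for when I rejected the AI's first suggestions.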

Moreover, recent research highlights that Large Language Models (LLMs) are not impervious to the tone of user input. The inclusion of emotional cues, specifically positively framed prompts, can significantly enhance the quality and performance of the model's output (Karlova and Fisher, 2013, cited in Vinay et al., 2025).

Therefore, it is safe to say that this experiment could have gone differently, had I been more polite to the AI.

References

- Alessandro, G., Dimitri, O., Cristina, B. and Anna, M. 2023. Does ChatGPT Pose a Threat to Human Identity? [Online]. [Accessed 27 November 2025]. Available from: https://papers.ssrn.com/sol3/papers.cfm?abstract_id=4377900.

- Crawford, K. 2021. Atlas of AI: Power, Politics, and the Planetary Costs of Artificial Intelligence. Yale University Press.

- Jenks, C.J. 2025. Communicating the Cultural Other: Trust and Bias in Generative AI and Large Language Models. Applied Linguistics Review. 16(2), pp.787–795.

- Vinay, R., Spitale, G., Biller-Andorno, N. and Germani, F. 2025. Emotional Prompting Amplifies Disinformation Generation in AI Large Language Models. Frontiers in Artificial Intelligence. 8.

- The Associated Press 2025. AI Expert Pulls the Lid off Deepfakes and Altered Images. AP. [Online]. [Accessed 26 November 2025]. Available from: https://research.ebsco.com/linkprocessor/plink?id=02e03ec1-165e-3e28-b428-3e53a9d5a320.